Maximum Entropy and the Inference of Pattern and Dynamics in Ecology

JOHN HARTE

Environmental Science, Policy and Management, UC Berkeley

External Professor, Santa Fe Institute

Constrained maximization of information entropy yields least-biased probability distributions. From physics to economics, and from forensics to medicine, this powerful inference method has enriched science. Here I apply it to ecology, using constraints derived from ratios of ecological state variables, to infer the functional forms of the metrics that describe patterns in the abundance, distribution, and energetics of species. I show that a static version of the theory describes essentially all observed patterns in quasi-steady-state systems remarkably well, but fails for systems undergoing rapid change. I will also discuss a promising stochastic-dynamic extension of the theory.
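As a minimal sketch of the inference step only (not the full theory described in the talk), the snippet below maximizes Shannon entropy for a distribution over abundance n = 1..n_max subject to normalization and a fixed mean, where the mean stands in for a state-variable ratio such as total individuals per species. The maximizing distribution has the form p(n) ∝ exp(-λn), and the Lagrange multiplier λ is found by bisection. The function name `maxent_distribution`, the bracket [-50, 50] for λ, and the single-constraint setup are illustrative assumptions; the theory in the abstract uses additional constraints and arrives at richer functional forms.

```python
import math


def maxent_distribution(n_max, mean, iters=200):
    """Maximum-entropy distribution on n = 1..n_max with a fixed mean.

    Maximizing Shannon entropy subject to normalization and the mean
    constraint gives p(n) proportional to exp(-lam * n); the Lagrange
    multiplier lam is found by bisection.
    """
    if not 1 < mean < n_max:
        raise ValueError("mean must lie strictly between 1 and n_max")
    ns = list(range(1, n_max + 1))

    def probs(lam):
        # Work with log-weights shifted by their maximum to avoid overflow.
        logw = [-lam * n for n in ns]
        shift = max(logw)
        w = [math.exp(x - shift) for x in logw]
        z = sum(w)
        return [x / z for x in w]

    def mean_of(p):
        return sum(n * pn for n, pn in zip(ns, p))

    # The mean decreases monotonically as lam increases.
    lo, hi = -50.0, 50.0  # assumed bracket, wide enough for moderate n_max
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mean_of(probs(mid)) > mean:
            lo = mid  # mean too large: need a larger lam
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return lam, probs(lam)


lam, p = maxent_distribution(50, 5.0)
print(f"lambda = {lam:.4f}, p(1) = {p[0]:.4f}")
```

With a mean of 5 on 1..50, λ comes out positive and p(n) decreases monotonically in n; setting the mean to the midpoint of the range gives λ ≈ 0, i.e. the uniform distribution, which is what maximum entropy should return when the constraint carries no information.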

Markov and clonal evolution models of breast tumor growth, resistance, and migration

PAUL NEWTON

Aerospace & Mechanical Engineering, Mathematics, and Norris Comprehensive Cancer Center, University of Southern California

Cancer is an evolutionary and entropy-increasing process taking place within a genetically and functionally heterogeneous population of cells that traffic from one anatomical site to another via hematogenous and lymphatic routes. The population of cells associated with the primary and metastatic tumors evolves, adapts, proliferates, and disseminates in an environment in which a fitness landscape controls survival and replication. Our goal in this talk is to provide an increasingly complex and detailed hierarchy of mathematical models that can produce quantitative simulations of stochastically evolving populations of cancer cells competing with healthy cells in spatially structured environments (directed graphs). The cells have birth and death rates determined by a fitness function associated with a prisoner’s dilemma payoff matrix. In the language of the prisoner’s dilemma, the healthy cells are the cooperators and the cancer cells are the defectors. In an isolated tumor, the fitness of the cancer cells, and hence their birth rate, surpasses that of the healthy cells, yet the overall fitness of the organ decreases as the cancer cells multiply. Each cell in the model system has a heritable numerical ‘genome’ (a binary string) that can undergo point mutations. Permutations of the binary string determine fitness and are coarse-grained into two basic cell types: healthy (low fitness) and cancerous (high fitness). Metastatic dissemination is modeled with Monte Carlo simulations on a Markov directed graph, where each anatomical site in the body is a node and transition probabilities govern the spread of the disease from node to node. The structure of the graph, the transition probabilities, the structure of the payoff matrices, and the mutational dynamics all shape the simulated outcomes, as well as the likely responses to simulated therapies.
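A minimal sketch of the Monte Carlo step on a Markov directed graph, assuming a hypothetical five-site graph with made-up transition probabilities. The site names, the numbers, and treating the liver as an absorbing site are illustrative choices, not values from the talk, and the cell-level fitness and mutation dynamics described above are omitted. Each walk follows one disseminating cell cluster; averaging many walks estimates how likely each site is to be occupied after a fixed number of transitions.

```python
import random

# Hypothetical directed graph of anatomical sites. Each row gives the
# transition probabilities out of one site and sums to 1. The sites,
# numbers, and the absorbing "liver" node are illustrative assumptions.
TRANSITIONS = {
    "breast": {"breast": 0.2, "lymph": 0.4, "bone": 0.2, "lung": 0.2},
    "lymph": {"lymph": 0.3, "bone": 0.3, "lung": 0.3, "liver": 0.1},
    "bone": {"bone": 0.7, "lung": 0.2, "liver": 0.1},
    "lung": {"bone": 0.2, "lung": 0.6, "liver": 0.2},
    "liver": {"liver": 1.0},
}


def simulate_spread(start, steps, rng):
    """One Monte Carlo walk of a disseminating cell cluster on the graph."""
    path = [start]
    site = start
    for _ in range(steps):
        targets = list(TRANSITIONS[site])
        weights = list(TRANSITIONS[site].values())
        site = rng.choices(targets, weights=weights, k=1)[0]
        path.append(site)
    return path


def site_occupancy(start, steps, n_runs, seed=0):
    """Fraction of walks that end at each site after `steps` transitions."""
    rng = random.Random(seed)
    counts = dict.fromkeys(TRANSITIONS, 0)
    for _ in range(n_runs):
        counts[simulate_spread(start, steps, rng)[-1]] += 1
    return {site: c / n_runs for site, c in counts.items()}


print(site_occupancy("breast", steps=5, n_runs=10_000))
```

Because the transition table is the only input, changing the graph structure or a single probability and rerunning is enough to see how the predicted metastatic pattern shifts, which mirrors the abstract's point that graph structure and transition probabilities shape the simulated outcomes.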

Entropy in Social Science: System and Strategic Uncertainty, Path Dependence, and System Robustness

SCOTT E PAGE

Political Science, Complex Systems, Economics

University of Michigan

Social scientists rarely use entropy as a formal measure, opting instead for variance, a measure of dispersion. In many contexts, entropy would be the more appropriate measure. In this talk, I describe three efforts to demonstrate the usefulness of entropy in social systems. First, I show how entropy serves as a proxy for strategic uncertainty and correlates with the complexity of the learning environment. This is joint work with Jenna Bednar, Yan Chen, and Tracy Liu. Second, I describe research with PJ Lamberson in which we use entropy to construct a formal measure of path dependence. Finally, I describe a project with Jenna Bednar and Andrea Jones-Rooy in which we relate entropy to system robustness.
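To illustrate why entropy can be the more appropriate dispersion measure for categorical outcomes such as strategy choices, the sketch below compares Shannon entropy with variance for hypothetical populations whose three strategies are coded 0, 1, 2. The numeric coding is an assumption that variance depends on and entropy does not; this is a toy comparison, not the measures developed in the projects above.

```python
import math
from collections import Counter


def shannon_entropy(choices):
    """Shannon entropy, in bits, of the empirical distribution of choices."""
    total = len(choices)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(choices).values())


def variance(values):
    """Population variance; only meaningful if the numeric coding is."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


# Hypothetical strategy choices, with three strategies coded 0, 1, 2.
two_way = [0, 0, 2, 2]    # players split between two strategies
three_way = [0, 1, 2]     # players spread evenly over all three
recoded = [0, 0, 1, 1]    # two_way with strategy 2 relabeled as 1

for name, data in [("two_way", two_way), ("three_way", three_way),
                   ("recoded", recoded)]:
    print(f"{name}: variance={variance(data):.3f}, "
          f"entropy={shannon_entropy(data):.3f} bits")
```

Variance ranks the two-strategy population as more dispersed (1.0 versus about 0.667), whereas entropy ranks the three-strategy population as more uncertain (log2 3 ≈ 1.585 bits versus 1 bit). Relabeling strategy 2 as 1 cuts the variance of the first population to 0.25 but leaves its entropy at 1 bit, since entropy depends only on the choice frequencies.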

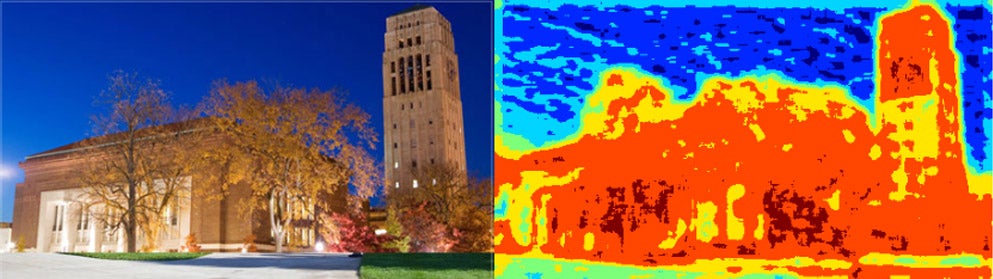

Building Vision Systems with Modern Machine Learning

JON SHLENS

Senior Research Scientist

Google Inc.

Vision appears easy for humans but has proven exceedingly difficult for computers. This seeming paradox has highlighted the surprisingly rich mathematics required to understand the content of visual imagery. Recent advances in machine learning have altered this equation substantially and transformed our expectations of how computers understand the visual world. We are now able to build models that capture complex statistical relationships in visual imagery and exploit these models to automatically describe content, and even to synthesize visual content de novo. In this talk I will describe selected research projects by my colleagues and me that have built state-of-the-art models to address concrete problems in vision. In particular, I will focus on how our understanding of vision has been enriched by importing ideas from disparate fields, including natural language processing and game theory. In each of these vignettes, my hope is to convey the excitement and potential of leveraging modern advances in machine learning to enrich our understanding of vision.

Nonequilibrium fluctuations, entropy production, and climate variability

JEFFREY B. WEISS

Department of Atmospheric and Oceanic Sciences

University of Colorado Boulder

The climate system is approximately in a nonequilibrium steady state maintained by the energy fluxes of incoming solar radiation and outgoing longwave radiation, which result in net entropy production. Patterns of natural climate variability such as El Niño, which have significant human impacts, are manifestations of preferred nonequilibrium fluctuations within this steady state. Observations of the Earth’s climate system are sufficient to build empirical models of the nonequilibrium properties of climate fluctuations, enabling quantification of the entropy production of climate variability. A theoretical, thermodynamically based understanding of climate variability has the potential to lead to better predictions of how climate variability will change under anthropogenic climate change.
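The abstract does not specify the form of the empirical models, so the sketch below makes a strong simplifying assumption: climate states are binned into a few discrete regimes whose step-to-step transitions form a Markov chain. For such a chain in steady state, a standard expression for the entropy production per step is σ = ½ Σ_ij (J_ij - J_ji) ln(J_ij / J_ji), with probability flux J_ij = π_i P_ij and stationary distribution π. It vanishes under detailed balance (equilibrium) and is positive when net probability currents circulate, the signature of a nonequilibrium steady state. The transition matrices are hypothetical, and the chain is assumed irreducible and aperiodic so that power iteration converges.

```python
import math


def stationary_distribution(P, iters=5000):
    """Stationary distribution by power iteration (assumes an irreducible,
    aperiodic chain so the iteration converges)."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    total = sum(pi)
    return [p / total for p in pi]


def entropy_production(P):
    """Steady-state entropy production per step (in nats) of a Markov chain.

    sigma = 1/2 * sum_ij (J_ij - J_ji) * ln(J_ij / J_ji), J_ij = pi_i * P_ij.
    Requires P[i][j] > 0 exactly when P[j][i] > 0; otherwise sigma diverges.
    """
    pi = stationary_distribution(P)
    n = len(P)
    sigma = 0.0
    for i in range(n):
        for j in range(n):
            if P[i][j] > 0 and P[j][i] > 0:
                fwd = pi[i] * P[i][j]  # probability flux i -> j
                bwd = pi[j] * P[j][i]  # probability flux j -> i
                sigma += 0.5 * (fwd - bwd) * math.log(fwd / bwd)
            elif P[i][j] > 0 or P[j][i] > 0:
                raise ValueError("one-way transition: entropy production diverges")
    return sigma


# Hypothetical three-regime chains.
CYCLIC = [[0.1, 0.7, 0.2],       # net current around 0 -> 1 -> 2 -> 0
          [0.2, 0.1, 0.7],
          [0.7, 0.2, 0.1]]
BIRTH_DEATH = [[0.5, 0.5, 0.0],  # nearest-neighbour moves: detailed balance
               [0.25, 0.5, 0.25],
               [0.0, 0.5, 0.5]]

print(entropy_production(BIRTH_DEATH))
print(entropy_production(CYCLIC))
```

The birth-death chain satisfies detailed balance, so its entropy production is zero up to rounding; the cyclic chain carries a net probability current around the loop and produces 0.5·ln 3.5 ≈ 0.63 nats per step.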