Visual influences on auditory speech perception

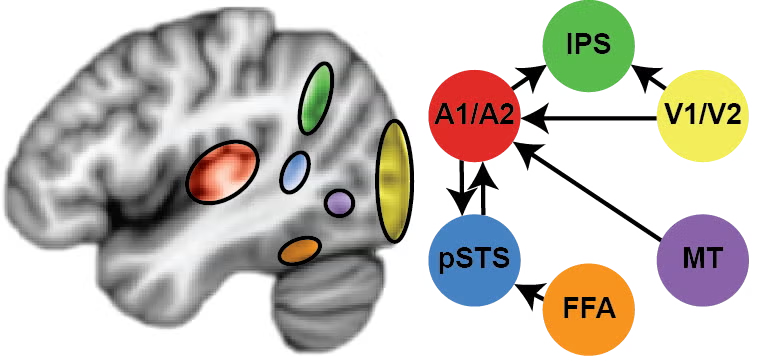

Multisensory research has demonstrated that observing lip movements is critical both for phoneme acquisition during development and for understanding speech when auditory information is degraded by environmental noise or auditory deficits. This line of research is funded by our current NIH K99/R00 grant. Our lab's model of multisensory speech perception proposes that visual information facilitates speech processing through multiple distinct anatomical and functional networks, each integrating a different type of information across the senses. These include (1) visual contextual information associated with auditory speech signals (e.g., the gender and identity of the speaker), (2) preparatory lip motion that predicts the timing of phonemic onsets, (3) lipreading information, and (4) low-level arousal mechanisms.

Auditory influences on visual processing

Our lab investigates the mechanisms through which auditory information facilitates visual processing, with particular interest in identifying what information is relayed between the senses and how this process differs depending on the task or the underlying neural mechanism. For example, hearing a sound can improve your ability to localize, identify, and respond to a visual object that appears at the same time. Is this because the sound increases arousal or attention, because it gives us two chances to respond to the object (referred to as intersensory redundancy), or because it provides additional, statistically relevant information about the object that helps tune visual processing? We have evidence that all three processes operate, depending on the task. Most importantly, these effects grow larger after sensory damage, suggesting that they serve as an important protective and compensatory mechanism in the brain.

Auditory-evoked activity in auditory areas (top row) and visual areas (bottom row). Sounds were 1 second in duration. Both auditory and visual areas show onset and offset responses to the sounds. Adapted from Brang D, Plass J, Sherman A, Stacey WC, Wasade VS, Grabowecky M, Ahn E, Towle VL, Tao JX, Wu S, Issa NP, Suzuki S (in review). Visual cortex responds to transient sound changes without encoding complex auditory dynamics.

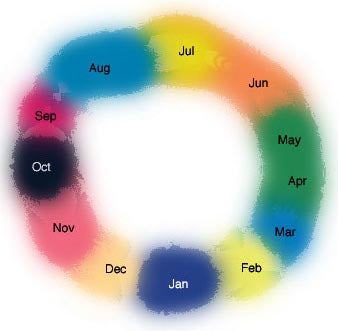

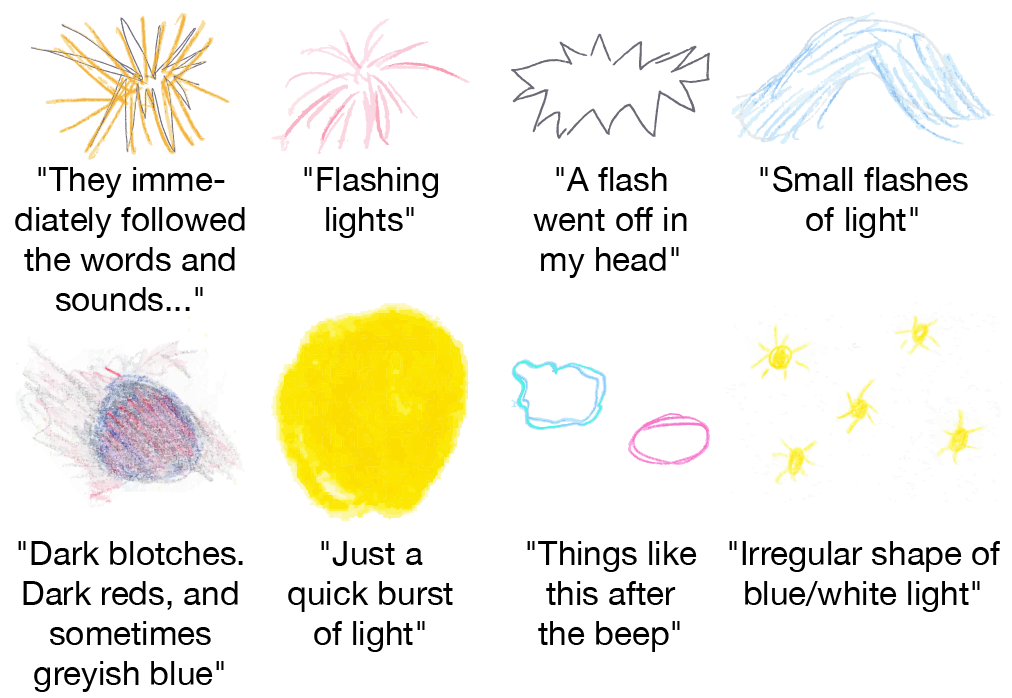

Sounds can evoke visual hallucinations following brief visual deprivation. Representative depictions of the auditory-driven visual percepts drawn by non-synesthetes. The depictions are consistent with Klüver form constants. Adapted from Nair A, Brang D (2019). Inducing Synesthesia in Non-Synesthetes: Short-Term Visual Deprivation Facilitates Auditory-Evoked Visual Percepts. Consciousness and Cognition, 70, 70-79.

Understanding altered sensory and cognitive functions in patients with intrinsic brain tumors

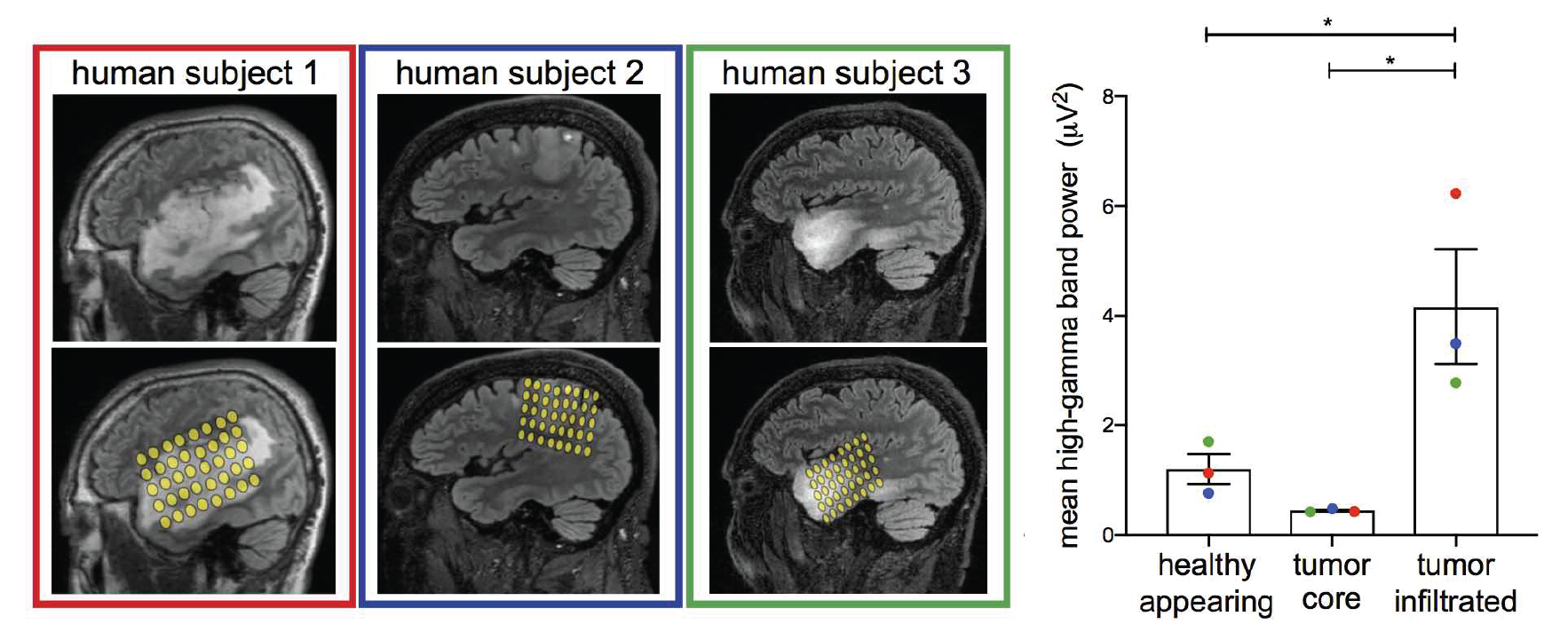

In collaboration with Shawn Hervey-Jumper's lab at the University of California, San Francisco, we are working to improve functional outcomes following brain surgery. This research program seeks to extend the cognitive mapping performed in the operating room beyond language and motor functions to other critical cognitive processes, including sensory integration and attention, with the goal of minimizing cognitive deficits after surgery. Using electrocorticography (ECoG), we are developing tools that quickly assess functional responses across a broad range of cortical areas without requiring overt responses, addressing two significant limitations of current cortical stimulation mapping approaches. As part of this program, we are longitudinally testing patients with intrinsic brain tumors on a battery of cognitive and perceptual tests to study both clinical outcomes and basic research questions. For example, damage to which brain areas most strongly affects cognitive processes such as listening, talking, or maintaining attention? Critically, if these areas are damaged, can their function recover through neural plasticity?

ECoG recordings over brain tumors show heightened neural activity in tumor-infiltrated areas. Adapted from Venkatesh HS, Morishita W, Geraghty AC, Silverbush D, Gillespie SM, Arzt M, Tam LT, Espenel C, Ponnuswami A, Ni L, Woo PJ, Taylor KR, Agarwal A, Regev A, Brang D, Vogel H, Hervey-Jumper S, Bergles D, Suvà ML, Malenka RC, Monje M (2019). Electrical and synaptic integration of glioma into neural circuits. Nature.

Synesthesia

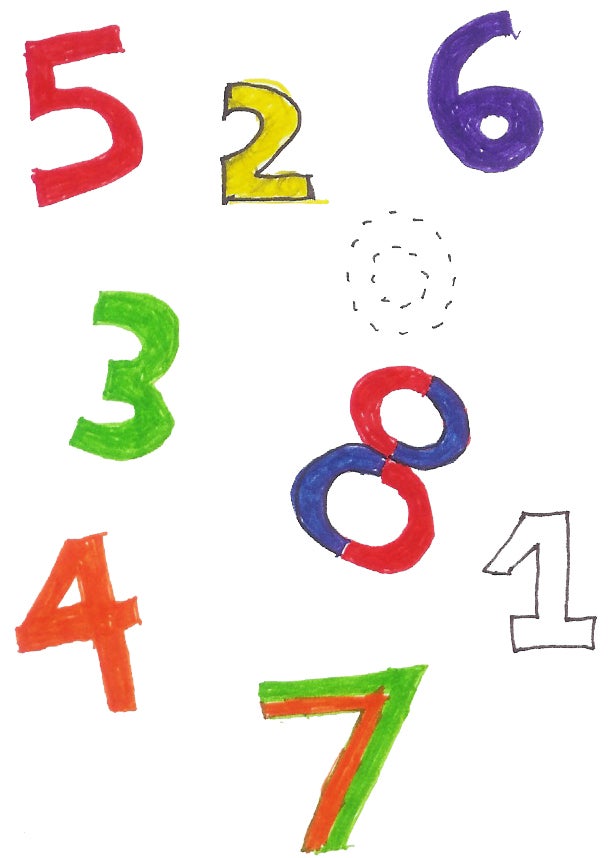

Synesthesia is a neurological phenomenon in which individuals are born with additional links between their senses. Some synesthetes experience black numbers and letters as having colors (e.g., a black 2 may look blue, or may simply feel as though it should be red), while for others sounds may elicit colors or tastes. In principle, synesthesia can link any two senses. These experiences are relatively common (2-4% of the population have at least one form), and having synesthesia is not associated with any disorder.

We are currently conducting research on the relationship between synesthesia and more typical multisensory experiences. If you believe you may have synesthesia and are interested in participating in research, please contact us at djbrang@umich.edu.