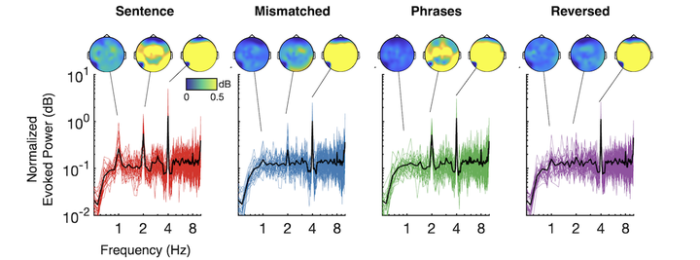

A team led by Chia-wen Lo (PhD 2021, now at the MPI for Human Cognitive and Brain Sciences) has a new paper in Neurobiology of Language, co-authored with Tzu-yun Tung (4th-year PhD student) and Alan Hezao Ke (PhD 2019). The paper tests whether previously observed low-frequency neural synchrony to timed speech reflects lexical repetition or the computation of hierarchical structure. The key take-away: timed speech (4 words/sec) that forms grammatical sentences elicits rhythmic brain responses matching the rate of phrases (2/sec) and sentences (1/sec). That synchrony disappears when the same words are swapped so that the lexical patterns still repeat (e.g. 1 verb/sec, 2 nouns/sec) but no longer form sentences. Read on for more!
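To make the rates and the word swap concrete, here is a minimal Python sketch. It assumes two-word phrases, four-word sentences and a strictly isochronous 4 words/sec stream; the helper names (`reverse_within_phrases`, `category_rates`, `onset_period_s`) and the A/N/V part-of-speech tags are hypothetical illustrations, not the authors' stimulus code. It shows that reversing words within each phrase changes the order but leaves nouns recurring every 0.5 s and the verb every 1 s.

```python
WORD_RATE_HZ = 4                     # isochronous presentation rate
PHRASE_RATE_HZ = WORD_RATE_HZ / 2    # two-word phrases -> 2 Hz
SENTENCE_RATE_HZ = WORD_RATE_HZ / 4  # four-word sentences -> 1 Hz


def reverse_within_phrases(items, phrase_len=2):
    """Reverse the order of items inside each consecutive phrase of phrase_len items (hypothetical helper)."""
    return [x for i in range(0, len(items), phrase_len) for x in reversed(items[i:i + phrase_len])]


def category_rates(tags, word_rate_hz=WORD_RATE_HZ):
    """Occurrences per second of each tag in an isochronous stream."""
    seconds = len(tags) / word_rate_hz
    return {tag: tags.count(tag) / seconds for tag in sorted(set(tags))}


def onset_period_s(tags, tag, word_rate_hz=WORD_RATE_HZ):
    """Seconds between successive occurrences of tag, or None if absent, single, or irregular."""
    positions = [i for i, t in enumerate(tags) if t == tag]
    gaps = {b - a for a, b in zip(positions, positions[1:])}
    return gaps.pop() / word_rate_hz if len(gaps) == 1 else None


sentence = ["white", "sheep", "eat", "grass"]
tags = ["A", "N", "V", "N"]                 # hypothetical tags: adjective, noun, verb, noun
print(reverse_within_phrases(sentence))    # ['sheep', 'white', 'grass', 'eat']
stream = reverse_within_phrases(tags) * 2  # two reversed "sentences" = 2 seconds of speech
print(category_rates(stream))              # {'A': 1.0, 'N': 2.0, 'V': 1.0}
print(onset_period_s(stream, "N"), onset_period_s(stream, "V"))  # 0.5 1.0
```

The same noun period (0.5 s) holds for the grammatical order `["A", "N", "V", "N"]`, which is why lexical regularity alone cannot distinguish the two conditions.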

Lo, C.-W., Tung, T.-Y., Ke, A., & Brennan, J. R. (2022). Hierarchy, not lexical regularity, modulates low-frequency neural synchrony during language comprehension. Neurobiology of Language.

doi: 10.1162/nol_a_00077

Abstract:


Neural responses appear to synchronize with sentence structure. However, researchers have debated whether this response in the delta band (0.5–3 Hz) really reflects hierarchical information, or simply lexical regularities. Computational simulations in which sentences are represented simply as sequences of high-dimensional numeric vectors that encode lexical information seem to give rise to power spectra similar to those observed for sentence synchronization, suggesting that sentence-level cortical tracking findings may reflect sequential lexical or part-of-speech information, and not necessarily hierarchical syntactic information. Using electroencephalography (EEG) data and the frequency-tagging paradigm, we develop a novel experimental condition to tease apart the predictions of the lexical and the hierarchical accounts of the attested low-frequency synchronization. Under a lexical model, synchronization should be observed even when words are reversed within their phrases (e.g. “sheep white grass eat” instead of “white sheep eat grass”), because the same lexical items are preserved at the same regular intervals. Critically, such stimuli are not syntactically well-formed; thus, a hierarchical model does not predict synchronization of phrase- and sentence-level structure in the reversed phrase condition. Computational simulations confirm these diverging predictions. EEG data from N = 31 native speakers of Mandarin show robust delta synchronization to syntactically well-formed isochronous speech. Importantly, no such pattern is observed for reversed phrases, consistent with the hierarchical, but not the lexical, account.
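The diverging predictions can be illustrated with a toy simulation. This is a minimal sketch under our own assumptions, not the simulations reported in the paper. The lexical model represents each word by a random vector for its part-of-speech category plus token-level noise (a stand-in for the high-dimensional lexical vectors described above). The hierarchical model emits, at each word, one unit for the word plus one per phrase or sentence node the word completes, and it assumes no nodes are completed for reversed phrases. A spectral peak is scored as power at 1 Hz or 2 Hz divided by the mean power of neighbouring frequency bins. All names and parameter values (`SAMPLES_PER_WORD`, `noise`, `dim`, `n_trials`, and so on) are hypothetical.

```python
import numpy as np

WORD_RATE_HZ = 4                      # isochronous presentation: 4 words per second
SAMPLES_PER_WORD = 4                  # assumption: hold each word's value for 4 samples
FS = WORD_RATE_HZ * SAMPLES_PER_WORD  # 16 Hz sampling rate for the toy signal

# Hypothetical part-of-speech templates for one four-word sentence.
GRAMMATICAL = ["A", "N", "V", "N"]  # e.g. "white sheep eat grass"
REVERSED = ["N", "A", "N", "V"]     # e.g. "sheep white grass eat"


def lexical_series(template, vectors, n_sent, noise, rng):
    """Lexical model: each word = its category vector + token-level noise."""
    words = np.array([vectors[tag] for tag in template * n_sent])
    return words + noise * rng.standard_normal(words.shape)


def hierarchical_series(grammatical, n_sent, noise, rng):
    """Hierarchical model: 1 for the word + 1 per phrase/sentence node it completes."""
    # [[A N]NP [V N]VP]S: NP completes at word 2; VP and S complete at word 4.
    # Assumption: reversed strings build no phrases, so every word scores 1.
    per_sentence = [1, 2, 1, 3] if grammatical else [1, 1, 1, 1]
    values = np.array(per_sentence * n_sent, dtype=float)[:, None]
    return values + noise * rng.standard_normal(values.shape)


def power_spectrum(word_series):
    """Upsample word-level values, remove the mean, and sum FFT power over dimensions."""
    signal = np.repeat(word_series, SAMPLES_PER_WORD, axis=0)
    signal = signal - signal.mean(axis=0)
    power = (np.abs(np.fft.rfft(signal, axis=0)) ** 2).sum(axis=1)
    freqs = np.fft.rfftfreq(signal.shape[0], d=1 / FS)
    return freqs, power


def peak_ratio(freqs, power, target_hz, half_width=5):
    """Power at the target bin divided by the mean power of neighbouring bins."""
    k = int(np.argmin(np.abs(freqs - target_hz)))
    neighbours = np.concatenate([power[k - half_width:k], power[k + 1:k + half_width + 1]])
    return power[k] / neighbours.mean()


def simulate(n_trials=20, n_sent=50, dim=16, noise=0.5, seed=0):
    """Return peak ratios at 1 Hz and 2 Hz for each (model, condition) pair."""
    rng = np.random.default_rng(seed)
    vectors = {tag: rng.standard_normal(dim) for tag in "ANV"}  # one vector per category
    results = {}
    for condition, template in [("grammatical", GRAMMATICAL), ("reversed", REVERSED)]:
        for model in ("lexical", "hierarchical"):
            total = 0.0
            for _ in range(n_trials):  # accumulate power over trials to stabilise the noise floor
                if model == "lexical":
                    series = lexical_series(template, vectors, n_sent, noise, rng)
                else:
                    series = hierarchical_series(condition == "grammatical", n_sent, noise, rng)
                freqs, power = power_spectrum(series)
                total = total + power
            mean_power = total / n_trials
            results[(model, condition)] = {hz: peak_ratio(freqs, mean_power, hz) for hz in (1.0, 2.0)}
    return results


if __name__ == "__main__":
    for (model, condition), ratios in simulate().items():
        print(f"{model:12s} {condition:11s} 1 Hz: {ratios[1.0]:7.1f}  2 Hz: {ratios[2.0]:7.1f}")
```

Under these assumptions the lexical model produces 1 Hz and 2 Hz peaks of the same expected size for both word orders, because reversing words within phrases leaves every category recurring with the same period. Only the hierarchical model separates the two conditions, which is the contrast the EEG data were used to test.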