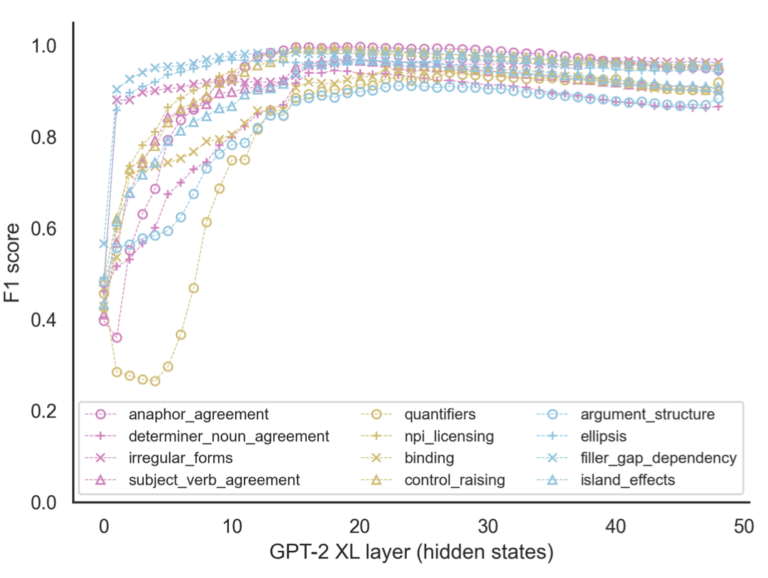

MA student Linyang He leads a team that advances probing methods for large language models by combining a linear decoder with BLiMP, a large-scale benchmark of linguistic minimal pairs. The result is a probing method that isolates patterns of layer-wise activation sensitive to distinct linguistic phenomena. There is a lot to unpack in…


Dr. Tzu-yun Tung defends dissertation, on to Chicago!

Many congratulations to Tzu-yun, who has successfully defended her dissertation, *Prediction and Memory Retrieval during Dependency Resolution*. The work combines electroencephalography, syntax, cognitive psychology and computational modeling to characterize how predictive mechanisms interact with memory retrieval during language comprehension, viewed through the lens of interference effects. The first paper from the dissertation is…

Reproducing the Alice analyses

One of our projects last summer was to go back into the archives and dig out the code used for the data analyses in all of our published papers that use the Alice in Wonderland EEG datasets. That code has been tidied up and is now shared publicly at https://github.com/cnllab/alice-eeg-shared. With this code you can reproduce all analyses, figures,…