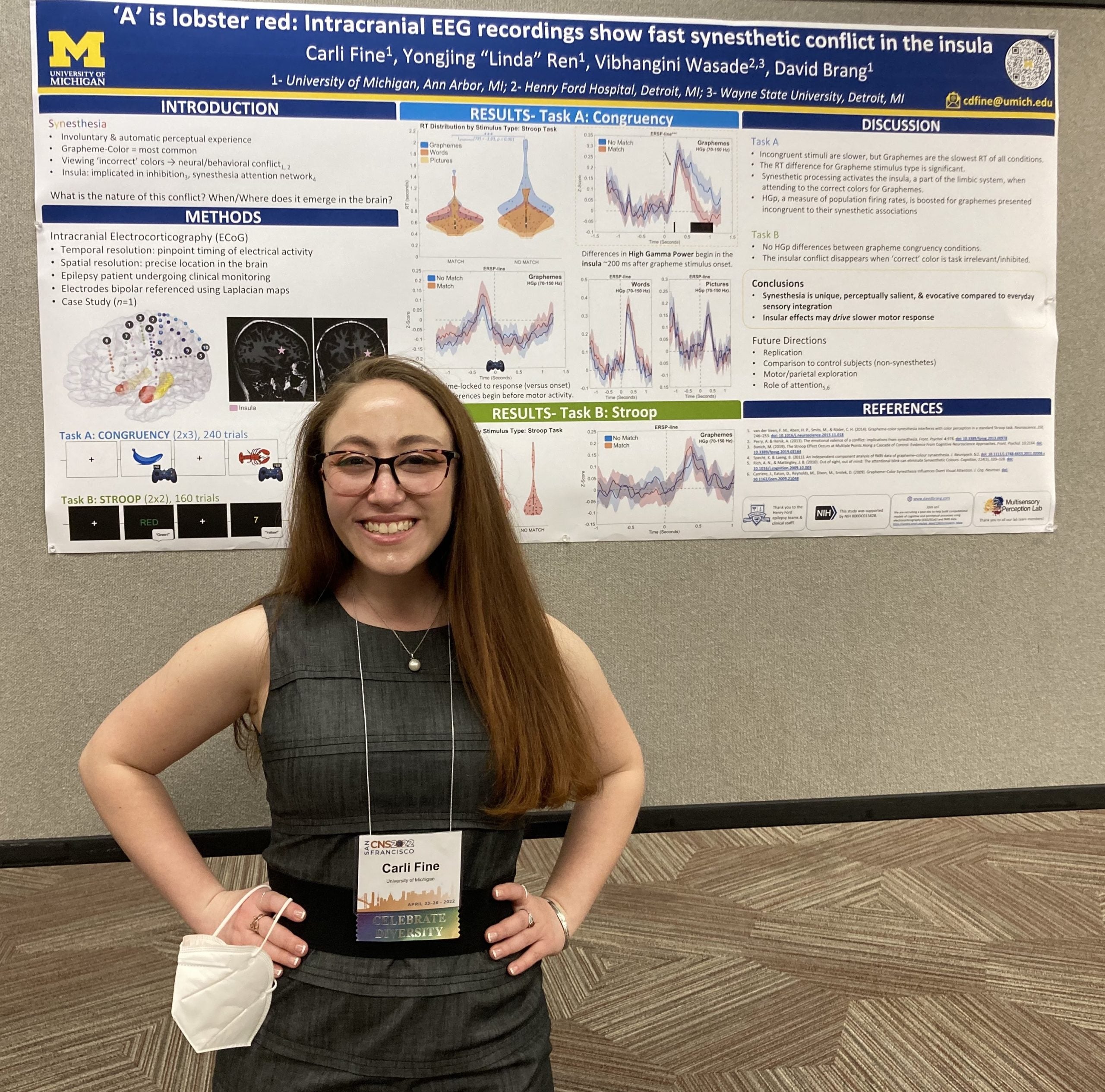

We had a wonderful time presenting at the Cognitive Neuroscience Society (CNS) 2022 Annual Meeting in sunny San Francisco, California. Zhewei (“Cody”), Carli, and recently graduated Dr. Karthik each presented a poster. Cody also gave a data blitz talk and earned a $10 award for keeping his slides to the allotted time limit.

Let us know if you would like to engage further with our work! #CNS2022