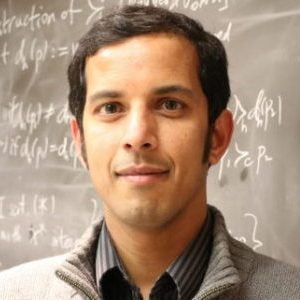

Keynote Speaker

Bin Yu

Professor of Statistics and EECS, University of California, Berkeley

About

Bin Yu is Chancellor’s Distinguished Professor and Class of 1936 Second Chair in the departments of Statistics and EECS at UC Berkeley. She leads the Yu Group, which consists of students and postdocs from Statistics and EECS. She was formally trained as a statistician, but her research extends beyond the realm of statistics. Together with her group, she has leveraged new computational developments to solve important scientific problems by combining novel statistical machine learning approaches with the domain expertise of her many collaborators in neuroscience, genomics and precision medicine. She and her team develop relevant theory to understand random forests and deep learning for insight into and guidance for practice.

She is a member of the U.S. National Academy of Sciences and of the American Academy of Arts and Sciences. She is Past President of the Institute of Mathematical Statistics (IMS), a Guggenheim Fellow, Tukey Memorial Lecturer of the Bernoulli Society, Rietz Lecturer of IMS, and a COPSS E. L. Scott prize winner. She holds an Honorary Doctorate from The University of Lausanne (UNIL), Faculty of Business and Economics, in Switzerland. She has recently served on the inaugural scientific advisory committee of the UK Turing Institute for Data Science and AI, and is serving on the editorial board of Proceedings of the National Academy of Sciences (PNAS).

Personal website

Interpreting Deep Neural Networks towards Trustworthiness

Abstract

Recent deep learning models have achieved impressive predictive performance by learning complex functions of many variables, often at the cost of interpretability. In this talk, I will begin with a discussion on defining interpretable machine learning in general and describe our agglomerative contextual decomposition (ACD) method to interpret neural networks. ACD attributes importance to features and feature interactions for individual predictions, has brought insights to NLP and computer vision problems, and can be used to directly improve generalization in interesting ways.

I will then focus on scientific interpretable machine learning. Building on ACD’s extension to the scientifically meaningful frequency domain, an adaptive wavelet distillation (AWD) interpretation method is developed. AWD is shown to both outperform deep neural networks and be interpretable in two prediction problems from cosmology and cell biology. Finally, I will address the need to quality-control the entire data science life cycle to build any model for trustworthy interpretation and introduce our Predictability Computability Stability (PCS) framework for such a data science life cycle…

Time & location

Friday, March 11th, 2022 at 3pm

Michigan League Ballroom

Michigan Speaker

Vijay Subramanian

Associate Professor of Electrical Engineering and Computer Science, University of Michigan

About

Vijay Subramanian has been an Associate Professor in the EECS Department at the University of Michigan since 2014. After graduating with his Ph.D. from UIUC in 1999, he did a few stints in industry, research institutes and universities in the US and Europe before his current position. His main research interests are in stochastic modeling, networks, applied probability and applied mathematics. A large portion of his past work has been on probabilistic analysis of communication networks, especially analysis of scheduling and routing algorithms. In the past he has also done some work with applications in information theory, mathematical immunology and coding of stochastic processes. His current research interests are in game theoretic and economic modeling of socio-technological systems and networks, reinforcement learning and the analysis of associated stochastic processes.

Personal website

Statistics in Action for Reinforcement Learning

Abstract

The use of sufficient statistics for learning, and the parametric and non-parametric learning of models, are common in statistics. In this talk we highlight the relevance and use of these methodologies in reinforcement learning, i.e., data-driven control of unknown stochastic dynamical systems.

We start by describing the use of sufficient statistics, particularly the notion of an information state and its relaxation to an approximate information state, to develop criteria for simpler representations of decentralized multi-agent systems that can be used to obtain close-to-optimal policies in a data-driven setting. Specifically, within the centralized training with distributed execution framework, we develop conditions that a simpler representation based on an approximate information state should satisfy so that low-regret policies can be obtained. A key new feature that we highlight is the compression of actions of the agents (and accompanying contribution to regret) that occurs as a result of an approximate information state. This is joint work with Hsu Kao at the University of Michigan that is to be presented at AISTATS 2022.

If time permits, we will also discuss recent work on challenges in parametric and non-parametric learning-based optimal control in stochastic dynamic systems using self-tuning adaptive control ideas. In this work, we study learning-based optimal admission control for a classical Erlang-B blocking system with unknown service rate, i.e., an M/M/k/k queueing system. At every job arrival, a dispatcher decides to assign the job to an available server or to block it. Every served job yields a fixed reward for the dispatcher, but it also results in a cost per unit time of service. Our goal is to design a dispatching policy that maximizes the long-term average reward for the dispatcher based on observing the arrival times and the state of the system at each arrival; critically, the dispatcher observes neither the service times nor departure times. We develop an asymptotically optimal learning-based admission control policy and use it to show how the extreme contrast in the certainty equivalent optimal control policies in our problem leads to difficulties in learning; these are illustrated using our regret bounds for different parameter regimes. This is joint work with Saghar Adler at the University of Michigan and Mehrdad Moharrami at the University of Illinois at Urbana-Champaign.
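
To make the M/M/k/k setting concrete, here is a minimal sketch of the known-service-rate case only; it is not the learning policy from the talk. It assumes exponential service times with mean 1/mu, so an admitted job earns the fixed reward minus an expected service cost of `cost_rate / mu`. The names `lam`, `mu`, `reward`, `cost_rate` and all function names are hypothetical, chosen for illustration. Under these assumptions the sign of that net value decides between "always admit when a server is free" and "always block", which is one way to read the contrast in certainty equivalent policies mentioned above.

```python
def erlang_b(k: int, offered_load: float) -> float:
    """Erlang-B blocking probability for k servers and offered load a = lam / mu.

    Uses the standard recursion B(0) = 1, B(n) = a*B(n-1) / (n + a*B(n-1)).
    """
    b = 1.0  # with zero servers every arrival is blocked
    for n in range(1, k + 1):
        b = offered_load * b / (n + offered_load * b)
    return b


def always_admit_reward(lam: float, mu: float, k: int,
                        reward: float, cost_rate: float) -> float:
    """Long-run average reward when every job that finds a free server is admitted.

    Assumption: each admitted job yields `reward` and costs `cost_rate` per unit
    of service time, with mean service time 1 / mu.
    """
    admitted_rate = lam * (1.0 - erlang_b(k, lam / mu))
    return admitted_rate * (reward - cost_rate / mu)


def certainty_equivalent_admit(mu_estimate: float, reward: float,
                               cost_rate: float) -> bool:
    """Plug an estimate of mu into the known-parameter rule: admit iff net value > 0."""
    return reward > cost_rate / mu_estimate
```

For example, `always_admit_reward(1.0, 1.0, 1, 2.0, 1.0)` evaluates the always-admit policy for a single server, and `certainty_equivalent_admit` flips from `True` to `False` as the estimated service rate drops below `cost_rate / reward`.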

Time & location

Thursday, March 10th, 2022 at 4pm

Michigan League Vandenberg